nnenna hacks

How to Preserve Understanding When AI 10x's Your Code Output

AI has increased output. But output ≠ clarity.

Here's how to keep yourself (and your team) from drowning in code you can't defend.

We can all see the fundamental shifts happening with the influx of AI in software development.

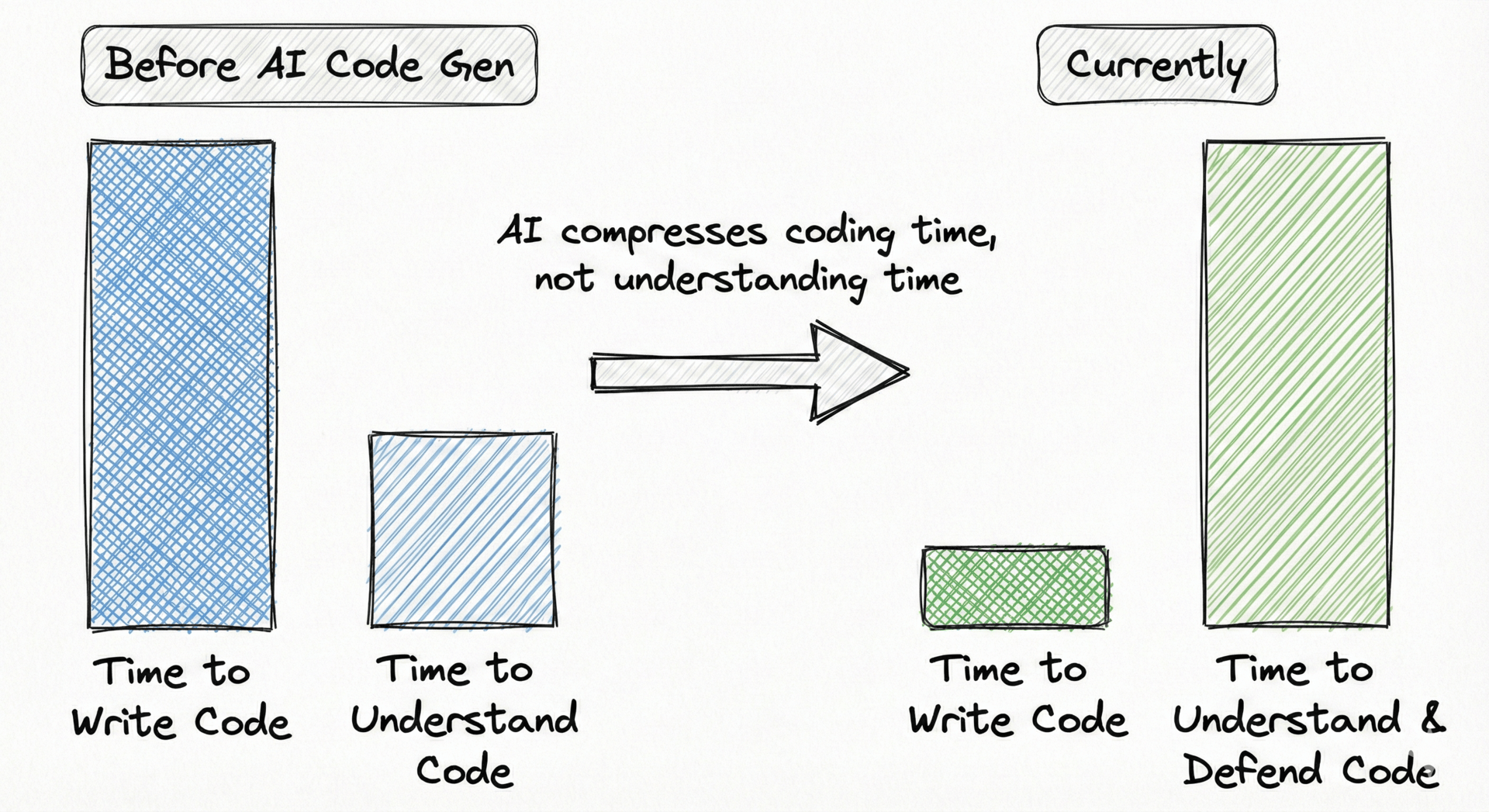

For decades, the constraints were obvious: the time it took to write code, architectural decisions, planning, coordination, triage, and so on.

But some of these constraints are loosening aggressively.

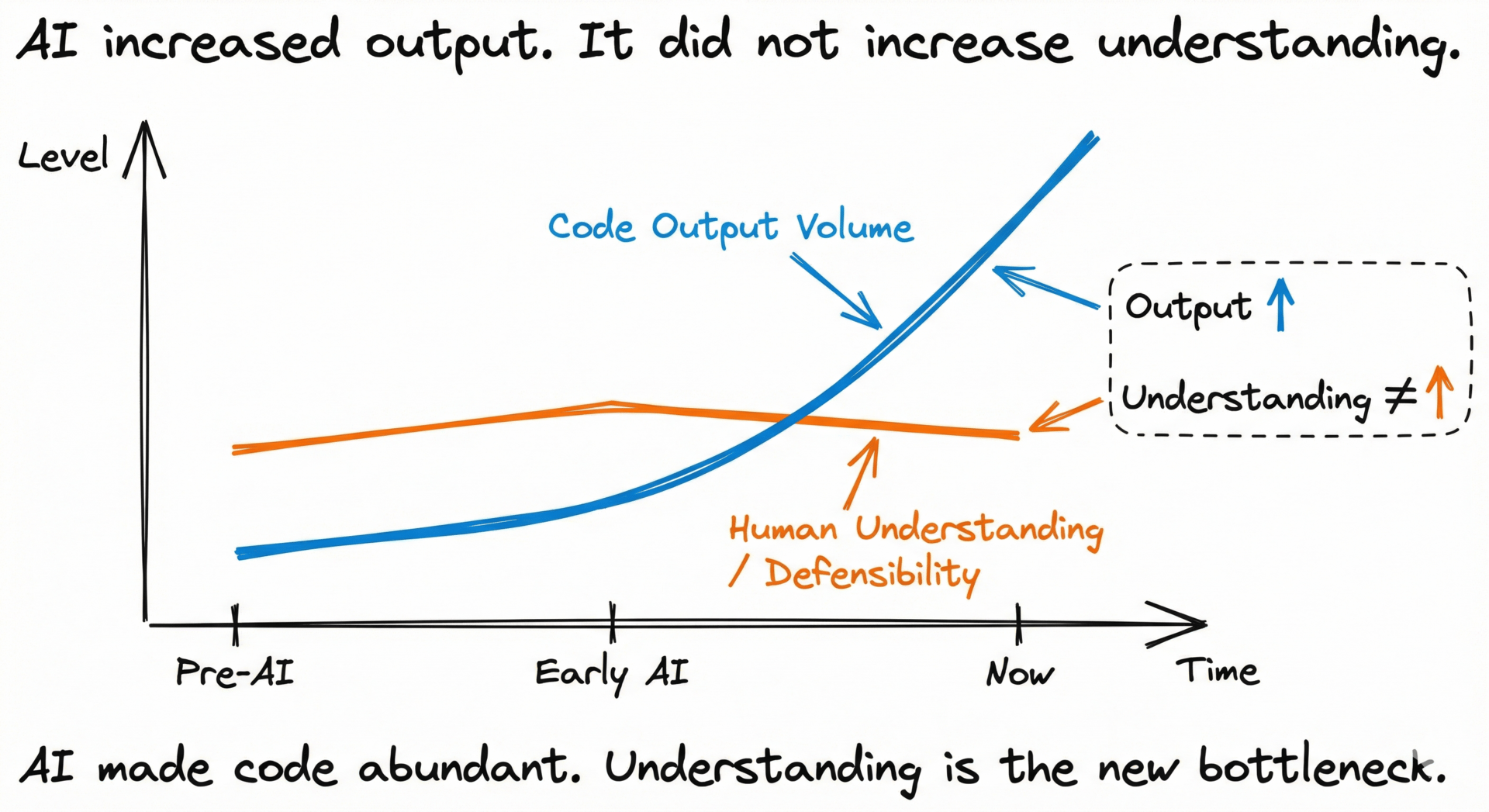

Code is now abundant, generation is nearly instant, and iteration is certainly cheap(er). But you should understand something very clearly: we are surrounding ourselves with systems that risk becoming completely opaque if we don't pay attention.

This is what happens when you optimize for output and mistake it for progress.

A big challenge right now is preserving understanding as code generation outpaces our comprehension and our cognitive capacity.

AI solves production, not meaning

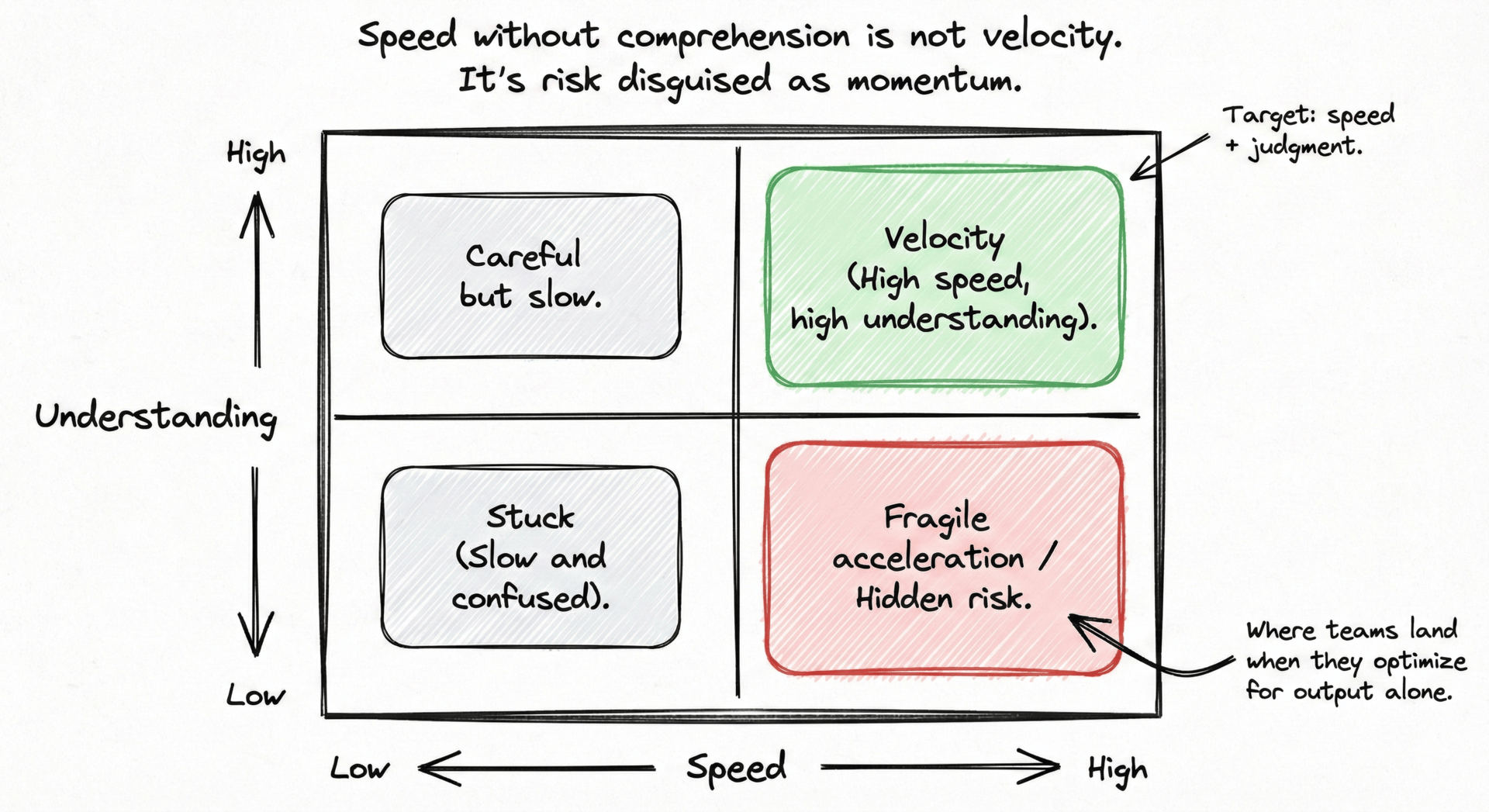

Speed without comprehension is not velocity. It's risk disguised as momentum.

AI makes it effortless to generate code without fully understanding intent, assumptions, or downstream consequences.

This means the work has shifted: someone must now be able to stand behind what was built in the first place.

When generating a thousand lines of code takes seconds and interpreting the output takes hours, understanding becomes the bottleneck.

AI optimizes generation. It doesn't directly optimize discernment.

As Martin Fowler notes on GenAI code, "I absolutely still care about the code." He weighs impact, probability of failure, and detectability, especially where AI hallucinations put high-stakes systems at risk.

The bottleneck is human judgment applied at the right moments.

What understanding requires now

Understanding is now something far more demanding and not very automation-friendly:

Why does this code exist at all? Not what it does, but why it was necessary in the first place.

What assumptions does it encode? What did the system believe to be true when those decisions were made?

How does it behave as part of a larger system? Not in isolation, but under load, under stress, when dependencies fail.

What risks does it introduce? Including the ones that remain invisible until scale exposes them.

Who is accountable when it fails? Because failure is inevitable, and responsibility cannot be abstracted away.

Can you defend this decision under scrutiny? Not just explain it, but justify why this approach over alternatives, based on judgment, not convenience.

You are no longer constrained by what you can build. You are constrained by what you can defend.

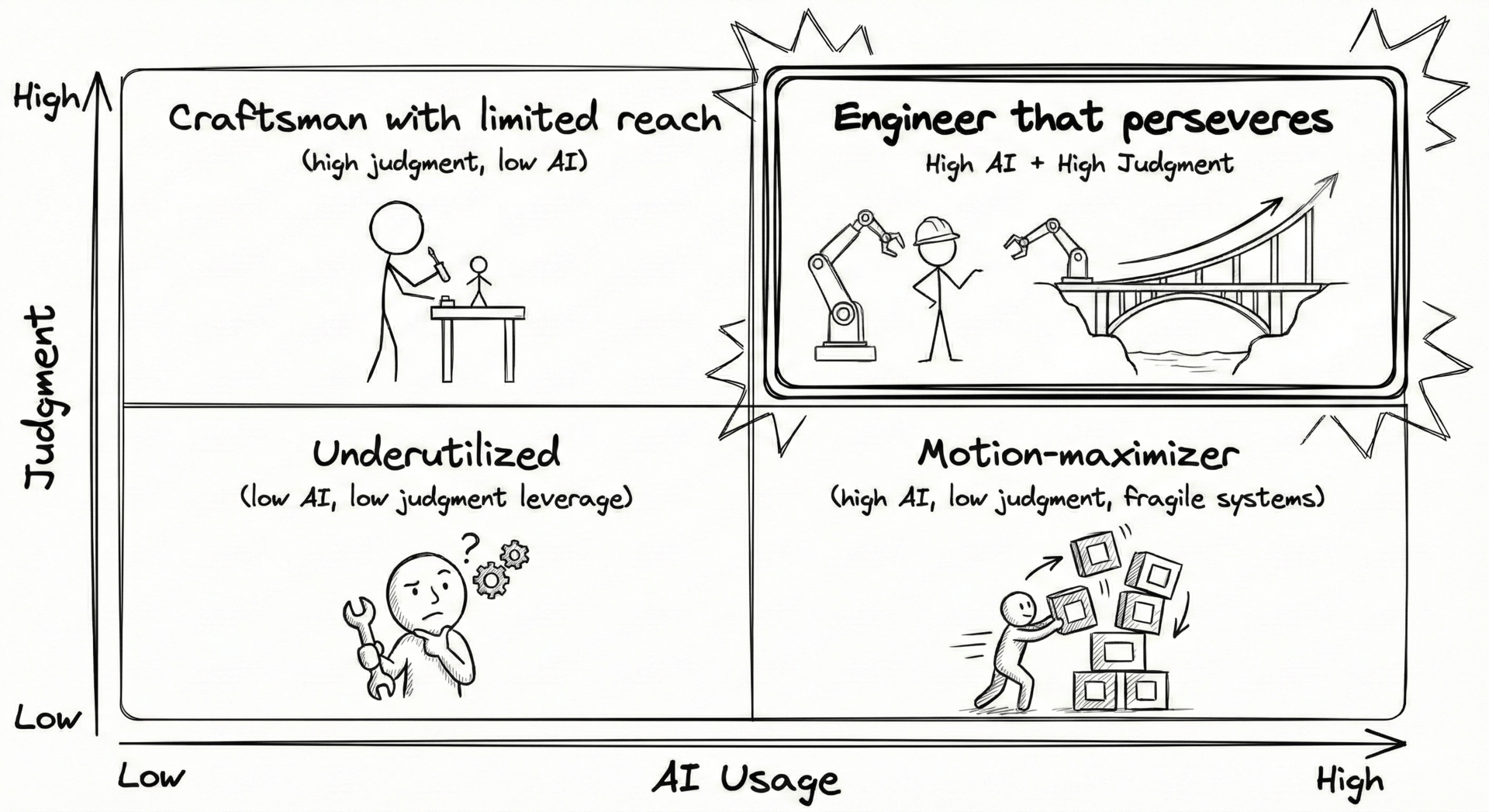

Judgment is now your core leverage

AI will make judgment scarce in the market. But scarcity creates value.

When code can be generated instantly, a rare resource is the discernment to know:

which code deserves to exist?

which trade-offs are acceptable?

which risks are worth taking?

which shortcuts will become technical debt?

Kent Beck observes juniors thriving with AI not through "vibe coding" but through "augmented coding," which accelerates learning while maintaining quality through rigorous evaluation.

Judgment can't be automated. It can only be deepened, preserved, or eroded.

“AI is actually great at helping you learn… [but] if you’ve outsourced all of your thinking to computers… you’ve stopped upskilling.”

– Jeremy Howard, fast.ai founder

If you accept AI-generated code without exercising judgment, you are training yourself, and your team, to defer responsibility to the algorithm.

3 questions to ask for clarity under speed

If understanding is now the bottleneck, the work is about preserving clarity as acceleration increases.

Here are three questions every professional developer should use when shipping AI-assisted code.

Do not treat these as bureaucratic checkboxes. They are safeguards that expose whether you are building systems with intention or assembling fragility.

1: The Intent Question

Can someone explain why this code/feature exists in one sentence?

Not what it does. Why it needed to exist at all.

If the answer is:

"The AI suggested it"

"It seemed like a good idea"

"We needed to ship fast"

Anything vague, circular, or deferred

Then intent has already been lost.

When intent is unclear, hidden risk is embedded in the system.

How to apply this:

Connect your AI dev workflow end-to-end.

- From your project management tool to your design tool to the PR, that trail of evidence is the traceability of intent. Everyone's stack is different, but it's worth connecting the dots however you can.

If you cannot articulate the problem clearly, you do not understand the solution.

If no one on your team can explain why this code exists, question if it should exist.
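One lightweight way to put the Intent Question into the review loop is a PR-description check. This is a hypothetical sketch, not a feature of any particular tool: it assumes your PR template has an `Intent:` line and references a ticket ID shaped like `PROJ-123`, and it flags the vague answers listed above.

```python
import re

# Hypothetical PR-template conventions (not a feature of any specific tool):
# an "Intent:" line, plus a ticket ID such as "PROJ-123" linking back to
# the project management tool.
INTENT_LINE = re.compile(r"^Intent:[ \t]*(.+)$", re.MULTILINE)
TICKET_ID = re.compile(r"\b[A-Z][A-Z0-9]+-\d+\b")

# Answers that signal intent has already been lost.
VAGUE_ANSWERS = {
    "the ai suggested it",
    "it seemed like a good idea",
    "we needed to ship fast",
}


def intent_problems(pr_body: str) -> list[str]:
    """Return problems with the PR's stated intent; an empty list means it passes."""
    problems = []
    match = INTENT_LINE.search(pr_body)
    if match is None:
        problems.append("missing 'Intent:' line")
    else:
        stated = match.group(1).strip()
        if stated.rstrip(".").lower() in VAGUE_ANSWERS:
            problems.append(f"vague intent: {stated!r}")
        # Rough heuristic for "one sentence": no sentence break inside the line.
        if re.search(r"[.!?]\s+\S", stated):
            problems.append("intent should fit in one sentence")
    if TICKET_ID.search(pr_body) is None:
        problems.append("no linked ticket ID")
    return problems


body = "Intent: Let support staff issue refunds without paging an engineer.\nTicket: PROJ-42"
print(intent_problems(body))  # []
```

The ticket pattern is deliberately loose; a check like this can only catch missing or obviously vague intent, while judging whether the stated intent is good remains a human job.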

2: The System Question

Can someone explain how this changes system behavior, not just what it does locally?

Does it increase coupling between components? Does it introduce new failure modes? Does it depend on assumptions that may not hold at scale or under different conditions?

If impact cannot be traced beyond the immediate change, you are not moving with direction. You are moving blind.

How to apply this:

Use tools that visually map dependencies so you have upstream and downstream visibility.

Ask: "What breaks if my assumption becomes false?"

Trace how the code interacts with the rest of the system, not just within its own module.

If no one can explain second-order effects, slow down until someone can.
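Answering "What breaks if my assumption becomes false?" starts with knowing what sits downstream of a change. Dependency-mapping tools do this at scale; the sketch below only illustrates the underlying idea, a breadth-first walk over a reversed dependency graph. The module names and the dict-based graph are hypothetical.

```python
from collections import deque


def downstream_dependents(deps: dict[str, set[str]], changed: str) -> set[str]:
    """Return every module that directly or transitively depends on `changed`.

    `deps` maps each module to the set of modules it imports (its upstream).
    """
    # Reverse the edges so we can walk from a module to the modules that use it.
    dependents: dict[str, set[str]] = {}
    for module, upstream in deps.items():
        for dep in upstream:
            dependents.setdefault(dep, set()).add(module)

    # Breadth-first search; `affected` doubles as the visited set.
    affected: set[str] = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for user in dependents.get(current, set()):
            if user not in affected:
                affected.add(user)
                queue.append(user)
    return affected


# Hypothetical module graph for illustration.
deps = {
    "billing": {"pricing", "db"},
    "pricing": {"db"},
    "checkout": {"billing", "cart"},
    "cart": {"db"},
    "reports": {"billing"},
}

print(sorted(downstream_dependents(deps, "pricing")))
# ['billing', 'checkout', 'reports']
```

A change to `pricing` looks local, but the walk shows that checkout and reporting inherit its assumptions too; those are the second-order effects someone should be able to explain.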

3: The Ownership Question

Can someone name who is accountable when this fails?

Not "the team." Not "we'll figure it out later." Not a Slack channel or a future retro session.

A specific person who:

understands the decision

can defend the trade-offs

will own the consequences when it breaks

Ownership without judgment is meaningless. The person accountable must have exercised independent judgment about whether this code should exist, not simply approved what the AI suggested.

When ownership dissolves, understanding has already left the system, and what remains is hope. Hope is neither a system nor a strategy in this line of work, especially with AI's nondeterminism in the mix.

How to apply this:

Make accountability visible in your code review process.

If no one is willing to put their name on a decision, it is not ready to ship.
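One way to make accountability visible is to require a named individual in every PR description and reject group answers. This sketch assumes a hypothetical `Accountable:` line in your PR template; the only platform fact it relies on is that GitHub team mentions take the form `@org/team`, so a handle containing a slash names a group rather than a person.

```python
import re
from typing import Optional

# Hypothetical PR-template convention: "Accountable: @handle".
ACCOUNTABLE_LINE = re.compile(r"^Accountable:[ \t]*(\S+)[ \t]*$", re.MULTILINE)


def accountable_owner(pr_body: str) -> Optional[str]:
    """Return the one person accountable for this change, or None.

    Rejects missing lines, multi-word answers ("the team"), non-mentions
    such as "#incidents" or "TBD", and GitHub team mentions ("@org/team"),
    because ownership must name a specific person.
    """
    match = ACCOUNTABLE_LINE.search(pr_body)
    if match is None:
        return None
    handle = match.group(1)
    if len(handle) < 2 or not handle.startswith("@") or "/" in handle:
        return None
    return handle
```

A CI job could block the merge whenever this returns `None`; how you wire that up depends on your code host. The check only proves a name is present. Whether that person actually exercised judgment is what the review itself has to establish.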

6 practical strategies to protect understanding at scale

Beyond the three questions, here are concrete practices you can implement immediately to preserve understanding as AI accelerates output.

Strategy 1: Scale Judgment with AI Code Review

AI code review strengthens the review boundary with context-aware analysis that surfaces intent gaps, system risks, and compliance issues before a human reviewer looks at the change.

This functions as a force multiplier for judgment, not a replacement for it. It is a quality gate that gives you an opportunity to internalize changes as code moves through the lifecycle.

Strategy 2: Design Constraints Before You Accelerate

Speed multiplies what already exists. If you accelerate without constraints, you multiply chaos.

Before increasing AI use, establish:

what types of code AI can generate without review

what types require enhanced human review

what types AI should not touch at all

Constraints are what make speed with AI sustainable.
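Writing those three categories down as data makes them enforceable, for example in CI. Below is a minimal sketch of such a policy; the path patterns and tier names are hypothetical examples, and matching uses Python's standard `fnmatch` (whose `*` also matches `/`). The first matching rule wins, and unmatched paths default to enhanced review so that new areas of the codebase start out constrained.

```python
from fnmatch import fnmatch

# Hypothetical policy: first matching pattern wins. Patterns and tiers are
# examples only; adapt them to your own repository layout.
POLICY = [
    ("src/auth/*", "no_ai"),          # AI should not touch at all
    ("src/payments/*", "no_ai"),
    ("src/*", "enhanced_review"),     # AI may assist; a human reviews closely
    ("docs/*", "ai_ok"),              # AI may generate without review
    ("*.md", "ai_ok"),
]
DEFAULT_TIER = "enhanced_review"      # unknown areas start constrained

# Ordered from least to most restrictive.
TIER_ORDER = ["ai_ok", "enhanced_review", "no_ai"]


def tier_for(path: str) -> str:
    """Return the tier of the first policy rule whose pattern matches `path`."""
    for pattern, tier in POLICY:
        if fnmatch(path, pattern):
            return tier
    return DEFAULT_TIER


def strictest_tier(changed_paths: list[str]) -> str:
    """A change is governed by the most restrictive tier among its files."""
    if not changed_paths:
        return "ai_ok"
    return max((tier_for(p) for p in changed_paths), key=TIER_ORDER.index)


print(strictest_tier(["docs/setup.md", "src/payments/refund.py"]))  # no_ai
```

Taking the strictest tier across all touched files means a single sensitive file pulls the whole change into the higher-scrutiny path, which errs on the side of constraint.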

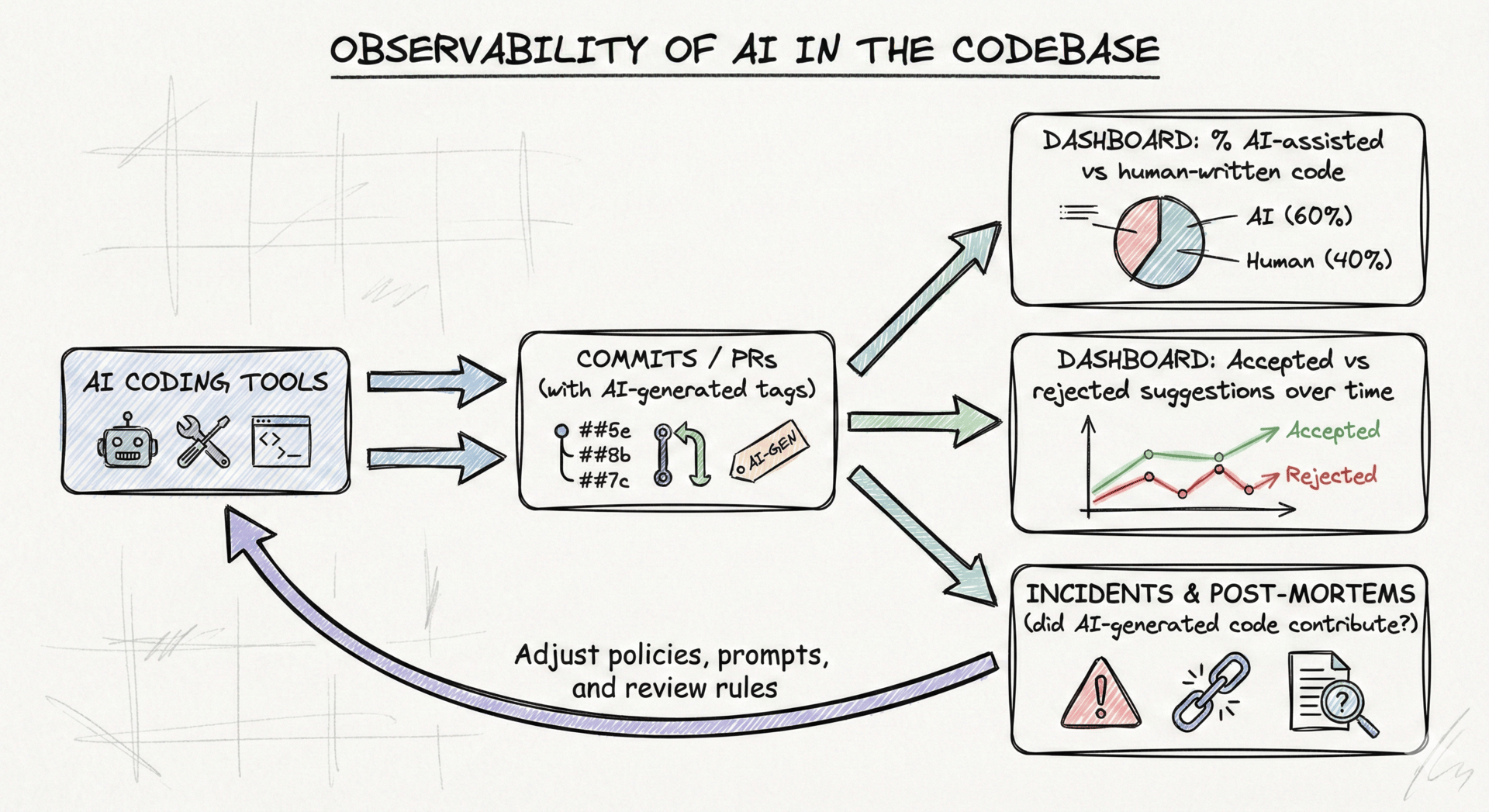

Strategy 3: Build Observability Into AI Workflows

If you cannot see how AI is changing your codebase, you cannot manage it.

Implement or leverage:

Tagging or labeling for AI-generated code (many AI code tools do this automatically per commit and PR)

Monitoring how much of your codebase is AI-assisted vs. human-written, at a high level (contribution data lives in GitHub and other tools)

Tracking which AI code review suggestions are accepted vs. rejected, and why

Retrospective meetings where you surface AI coding sentiment

Post-mortems examining whether AI-generated code contributed to specific incidents

You cannot improve what you do not measure. Make AI's impact visible.
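As one example of making AI's impact visible, the sketch below computes the share of commits that carry an AI-assistance trailer. The `AI-Assisted` trailer is a hypothetical team convention; tools that tag commits automatically use their own formats, so adjust the pattern. To feed it real data, you could split the output of `git log --format=%B%x00` on the NUL character.

```python
import re

# Hypothetical trailer convention, e.g. a final line "AI-Assisted: yes".
AI_TRAILER = re.compile(
    r"^AI-Assisted:[ \t]*(yes|true)[ \t]*$", re.IGNORECASE | re.MULTILINE
)


def ai_assisted_share(commit_messages: list[str]) -> float:
    """Fraction of commits (0.0 to 1.0) whose message carries the AI trailer."""
    # Skip empty entries, e.g. the trailing piece left after splitting on NUL.
    messages = [m for m in commit_messages if m.strip()]
    if not messages:
        return 0.0
    tagged = sum(1 for m in messages if AI_TRAILER.search(m))
    return tagged / len(messages)


messages = [
    "Add refund endpoint\n\nAI-Assisted: yes",
    "Fix typo in README",
    "Refactor pricing rules\n\nAI-Assisted: true",
    "Bump dependency versions",
]
print(f"{ai_assisted_share(messages):.0%} of commits are AI-assisted")
# 50% of commits are AI-assisted
```

A single ratio is only a starting point; tracked over time and read alongside post-mortems, it shows where AI-assisted code is concentrating.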

Strategy 4: Preserve Institutional Memory

AI does not retain awareness between sessions. You have the awareness, connections, and memory. Leverage them.

Document:

Why certain approaches were tried and abandoned

What assumptions were tested and found invalid

Which trade-offs were consciously accepted and which were accidents

How systems are supposed to behave, not just how they currently behave

These can be ADRs (architectural decision records) or something similar: simple documentation, written in Markdown if that makes it easiest for AI tools to consume.

When deeper understanding and context live only in our minds, they disappear when people leave. When they live in documentation, they compound.

In Developer Experience, they call this "teachable, repeatable, reportable".

Knowledge silos weren't great before, and they'll be worse now with AI. Document things.
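For illustration, here is a minimal sketch of a decision record rendered to Markdown from Python, so a script or PR bot could create one alongside a change. The sections follow the common Status / Context / Decision / Consequences layout from Michael Nygard's ADR template; the `DecisionRecord` class, the optional "Alternatives considered" section, and the example content are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class DecisionRecord:
    number: int
    title: str
    status: str          # e.g. "Accepted", "Superseded"
    context: str         # why a decision was needed; assumptions held at the time
    decision: str        # what was chosen
    consequences: str    # trade-offs consciously accepted
    alternatives: list[str] = field(default_factory=list)  # tried or rejected, and why
    decided_on: date = field(default_factory=date.today)

    def to_markdown(self) -> str:
        lines = [
            f"# {self.number}. {self.title}",
            "",
            f"Date: {self.decided_on.isoformat()}",
            "",
            "## Status", self.status, "",
            "## Context", self.context, "",
            "## Decision", self.decision, "",
            "## Consequences", self.consequences,
        ]
        if self.alternatives:
            lines += ["", "## Alternatives considered"]
            lines += [f"- {alt}" for alt in self.alternatives]
        return "\n".join(lines) + "\n"


# Hypothetical example record.
adr = DecisionRecord(
    number=7,
    title="Cache pricing rules in memory",
    status="Accepted",
    context="Pricing lookups dominate checkout latency; rules change at most daily.",
    decision="Load rules at startup and refresh them every 10 minutes.",
    consequences="Price changes can take up to 10 minutes to apply.",
    alternatives=["Per-request database reads: rejected, too slow under load."],
    decided_on=date(2025, 1, 15),
)
print(adr.to_markdown())
```

The "Alternatives considered" list is where abandoned approaches and invalidated assumptions get recorded, which is exactly the context that is lost when it lives only in someone's head.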

Strategy 5: Slow Down Where It Matters Most

Not all code deserves the same level of scrutiny. High-impact changes require higher investment in understanding.

Apply asymmetric rigor:

Security boundaries: maximum scrutiny, start with minimal AI autonomy

Business logic: high scrutiny, AI assistance with human validation

Formatting and style: minimal scrutiny, explore AI autonomy

Speed is valuable when applied strategically.

Strategy 6: Treat Judgment as a Muscle That Atrophies

The more you defer to AI without questioning its output, the weaker your judgment becomes.

Protect your judgment capacity:

Review AI suggestions you rejected and validate your reasoning.

- Document it. PR comments in GitHub may be the easiest place! You can always pull them down to your local machine and generate a retro report on any patterns that surface.

Practice explaining why alternative approaches would have been worse

Mentor others by demonstrating judgment in code review, not just pointing out issues (engineering enablement)

Maintain space for technical disagreement: judgment strengthens through exercise, not consensus
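If rejected suggestions are documented as PR comments, a small script can turn them into a retro report. This sketch assumes a hypothetical comment convention, `Rejected AI suggestion: <reason>`, and that the comments have already been exported as plain strings (for example through your code host's API); it simply counts the most common reasons.

```python
import re
from collections import Counter

# Hypothetical convention for documenting a rejected AI suggestion in a PR comment.
REJECTION = re.compile(
    r"^Rejected AI suggestion:[ \t]*(.+?)[ \t]*$", re.IGNORECASE | re.MULTILINE
)


def rejection_report(comments: list[str], top: int = 3) -> list[tuple[str, int]]:
    """Return the `top` most common rejection reasons, normalized to lowercase."""
    reasons = Counter()
    for comment in comments:
        for match in REJECTION.finditer(comment):
            reasons[match.group(1).rstrip(".").lower()] += 1
    return reasons.most_common(top)


comments = [
    "Rejected AI suggestion: ignores retry limits.",
    "LGTM",
    "Rejected AI suggestion: Ignores retry limits",
    "Rejected AI suggestion: adds an unused dependency",
]
print(rejection_report(comments))
# [('ignores retry limits', 2), ('adds an unused dependency', 1)]
```

Recurring reasons are the useful signal: they show where the AI keeps missing context and where your team's judgment is doing the most work.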

If your team stops questioning AI output, they have not become more efficient. They have outsourced their most valuable capability.

Consider this an opportunity to elevate your skills.

Even skeptics like DHH (creator of Ruby on Rails) admit AI can't yet fully match a junior developer, which underscores why these tools must amplify human review rather than replace it.

Characteristics of engineers that level up

In this climate, the above-average engineers will be those who protect understanding as a first-class constraint.

You refuse to conflate motion with progress.

You treat comprehension as non-negotiable, even when speed is seductive.

You validate intent, because correct code solving the wrong problem is still failure.

You design and articulate constraints before acceleration, because unbounded speed produces chaos.

You preserve coherence as systems evolve faster than human memory can track

You build systems colleagues can stand behind when scrutiny arrives.

Because scrutiny always arrives.

And when it does, the only thing that matters is whether you can explain why the code exists, how it behaves, and who owns the outcome.

The work is more concentrated now at different (and higher) levels of thinking.

Ultimately, judgment is what separates reliable software from fragile systems.

Start with one question. The Intent Question. Apply it to AI-generated changes for one week. See what it reveals.

And decide if you can afford to ship code you do not fully understand.
